Imagine teaching a computer to ‘see’ and understand the world around it in three dimensions. That’s the challenge of RGB-D semantic segmentation, where a computer analyzes a color image together with its depth map and labels every pixel with the object it belongs to, such as a wall, a piece of furniture, or a person. Researchers at Universiti Teknologi Malaysia (UTM) have proposed a new approach aimed at improving the accuracy and reliability of this technology.

Existing methods often struggle to accurately fuse information from regular color images (RGB) and depth maps (D), leading to errors and inconsistencies in the final object labels. The UTM team recognized that simply combining features from the two data streams is not enough. They drew inspiration from successful image processing techniques that use a ‘pixel-node-pixel’ pipeline, in which pixels are grouped into graph nodes, the nodes are processed, and the results are mapped back onto pixels. To address misalignment between the RGB and depth data, they proposed a method in which the RGB images guide the integration of geometric information from the depth maps. This is achieved through what’s called ‘late fusion,’ which combines the two data streams at a later stage of processing so that early errors are not carried forward.

But they didn’t stop there. The team realized that traditional convolutional neural networks (CNNs), often used to process images, aren’t ideal for handling raw depth maps. Therefore, they transformed the depth data into a ‘normal map,’ which encodes the orientation of the surface at each pixel, making it easier for CNNs to extract meaningful information about the 3D structure of objects.
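
To make the idea of a normal map concrete, here is a minimal sketch of one common way to estimate surface normals from a depth map using finite differences. It is an illustration under simplifying assumptions, not the UTM team’s implementation: the function name `depth_to_normal_map` is hypothetical, and the sketch treats the pixel grid as the spatial grid instead of using real camera intrinsics.

```python
import numpy as np

def depth_to_normal_map(depth):
    """Estimate a unit surface normal for every pixel of a depth map.

    Assumption: pixel spacing stands in for real-world spacing, so no
    camera intrinsics are used. A real pipeline would account for them.
    """
    depth = np.asarray(depth, dtype=np.float64)
    # Finite-difference slopes along image rows (y) and columns (x).
    dz_dy, dz_dx = np.gradient(depth)
    # A surface z = f(x, y) has an unnormalized normal (-dz/dx, -dz/dy, 1).
    normals = np.stack([-dz_dx, -dz_dy, np.ones_like(depth)], axis=-1)
    # Scale every normal vector to unit length.
    normals /= np.linalg.norm(normals, axis=-1, keepdims=True)
    return normals

# A flat surface facing the camera: every normal points straight along z.
flat_normals = depth_to_normal_map(np.ones((4, 5)))

# A surface that recedes steadily from left to right: normals tilt in x.
ramp_normals = depth_to_normal_map(np.tile(np.arange(5.0), (4, 1)))
```

The result has three channels per pixel, just like an RGB image, which is one reason a normal map is a convenient input for a CNN built for color images.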

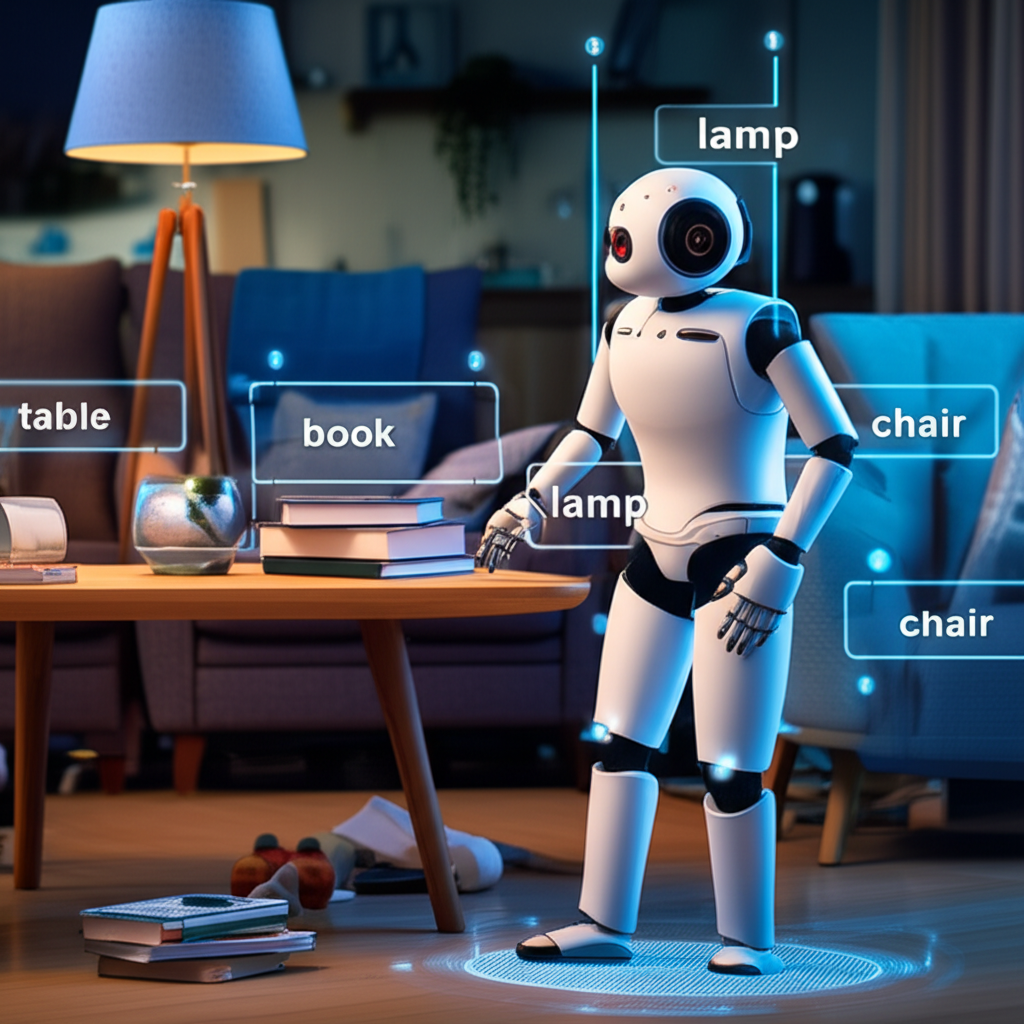

To further refine the segmentation, the UTM researchers employed Graph Neural Networks (GNNs). GNNs are excellent at capturing relationships between different parts of an image. By applying GNNs, the system can consider how objects relate to each other within a scene, reducing errors and creating more coherent and accurate segmentations. Think of it as the computer understanding that a chair is more likely to be found next to a table than floating in mid-air.
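
For readers curious about what a GNN actually computes, the sketch below shows a single generic message-passing step in which each node averages its own features with those of its neighbors. This is a textbook-style layer chosen for illustration, not the architecture from the paper; the function name `message_passing_step` and the toy graph are assumptions.

```python
import numpy as np

def message_passing_step(node_features, adjacency, weight):
    """One mean-aggregation message-passing step followed by a ReLU.

    node_features: (num_nodes, in_dim) array, one row per graph node.
    adjacency:     (num_nodes, num_nodes) array, 1 where two nodes are linked.
    weight:        (in_dim, out_dim) array; learned in a real network.
    """
    adjacency = np.asarray(adjacency, dtype=np.float64)
    # Add self-loops so each node also keeps its own information.
    a_hat = adjacency + np.eye(adjacency.shape[0])
    # Row-normalize: each node takes the mean over itself and its neighbors.
    a_hat /= a_hat.sum(axis=1, keepdims=True)
    # Aggregate neighbor features, apply the linear map, then the ReLU.
    return np.maximum(a_hat @ node_features @ weight, 0.0)

# Toy scene graph with three regions linked in a chain: 0 - 1 - 2.
features = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
links = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
updated = message_passing_step(features, links, np.eye(2))
```

After one step, each region’s features already reflect its neighbors, which is how context such as “chairs tend to sit near tables” can influence a label.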

Finally, the researchers addressed issues related to how the system assigns pixels to different regions. They developed a novel approach using a Kullback-Leibler (KL) divergence loss to ensure that important pixel features aren’t missed, along with a method that connects regions only when they are both spatially close and semantically similar. This helps the system better understand the context and relationships between different objects in the scene.
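
The two ingredients mentioned here can be sketched in a few lines. The example below shows the standard definition of KL divergence between two discrete distributions, plus one plausible way to link regions that are both nearby and similar. How the paper applies the loss and builds its graph is not described in this article, so the function names, the cosine-similarity measure, and the thresholds `max_dist` and `min_sim` are all assumptions made for illustration.

```python
import numpy as np

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same outcomes."""
    # Clip to avoid log(0); near-zero entries of p contribute almost nothing.
    p = np.clip(np.asarray(p, dtype=np.float64), eps, 1.0)
    q = np.clip(np.asarray(q, dtype=np.float64), eps, 1.0)
    return float(np.sum(p * np.log(p / q)))

def build_region_graph(centers, features, max_dist, min_sim):
    """Link two regions only if they are close in space AND similar in features.

    centers:  (num_regions, 2) region center coordinates.
    features: (num_regions, dim) nonzero feature vector per region.
    max_dist, min_sim: hypothetical thresholds chosen for this sketch.
    """
    centers = np.asarray(centers, dtype=np.float64)
    features = np.asarray(features, dtype=np.float64)
    # Pairwise Euclidean distance between region centers.
    dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=-1)
    # Pairwise cosine similarity between region feature vectors.
    unit = features / np.linalg.norm(features, axis=1, keepdims=True)
    sim = unit @ unit.T
    adjacency = (dist <= max_dist) & (sim >= min_sim)
    np.fill_diagonal(adjacency, False)  # no region is linked to itself
    return adjacency

# Identical distributions give zero divergence; differing ones give more.
same = kl_divergence([0.5, 0.5], [0.5, 0.5])
different = kl_divergence([0.5, 0.5], [0.9, 0.1])

# Four regions: 0 and 1 are close and similar; 2 is far; 3 is close but different.
graph = build_region_graph(
    centers=[[0, 0], [1, 0], [10, 0], [0, 1]],
    features=[[1.0, 0.0], [0.9, 0.1], [1.0, 0.0], [0.0, 1.0]],
    max_dist=2.0,
    min_sim=0.9,
)
```

In this toy example only regions 0 and 1 end up connected, because every other pair fails at least one of the two conditions.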

The potential impact of this research is broad. More accurate and reliable RGB-D semantic segmentation could benefit a variety of applications, including robotics, augmented reality, autonomous driving, and even medical imaging. For instance, a robot equipped with this technology could more easily navigate a complex environment or assist in surgery. The next steps involve further refining the algorithms and testing them in real-world scenarios. This research from UTM brings us closer to a future where computers can truly ‘see’ and understand the world around us.

DOI: https://doi.org/10.48550/arXiv.2501.18851